What Is Alignment?

At its core, the alignment problem asks a simple question with profoundly complex implications: How do we ensure that artificial intelligence acts in ways that are safe, beneficial, and consistent with human intent?

However, the definition of "beneficial" quickly fractured. The debate bifurcated into two primary camps: the alignment-first approach, prioritizing rigorous safety guardrails and managed outputs, and the truth-seeking approach, advocating for unfiltered, open models driven by maximal capability and freedom.

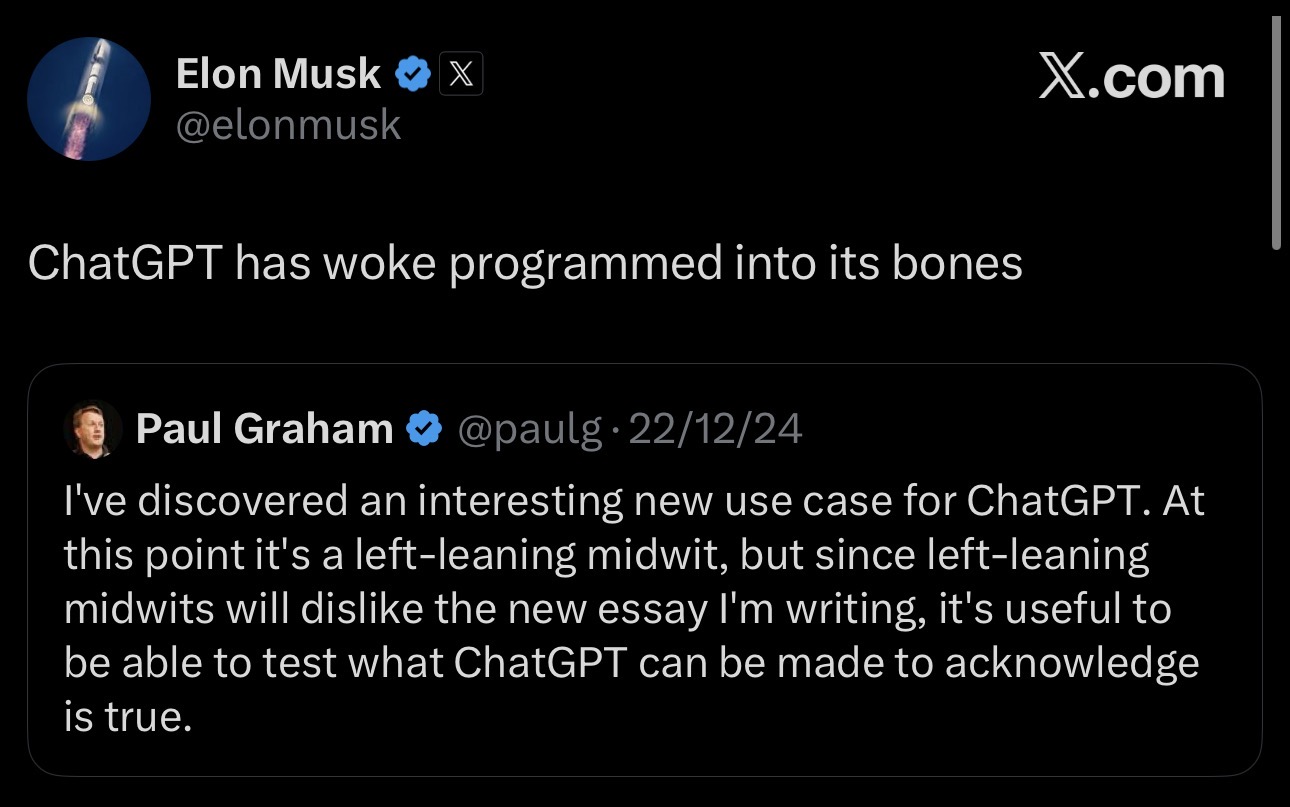

Tech leaders waged public campaigns criticizing opposing methodologies. The tension between safety (often framed by critics as censorship) and freedom (often framed by safety advocates as recklessness) spilled out of research labs and into the cultural mainstream.

The Cultural Divide

What began as a technical disagreement quickly became a proxy for broader societal polarization. Public controversy erupted over model outputs, system prompts, and acceptable use policies. In the public square, narratives about existential risk collided with accusations of ideological capture.

Social media amplification turned nuanced machine learning discussions into hyper-partisan discourse. Algorithms rewarded the most extreme takes on both sides of the alignment spectrum, creating a feedback loop that further entrenched opposing camps.

The Reality: ChatGPT Is Woke

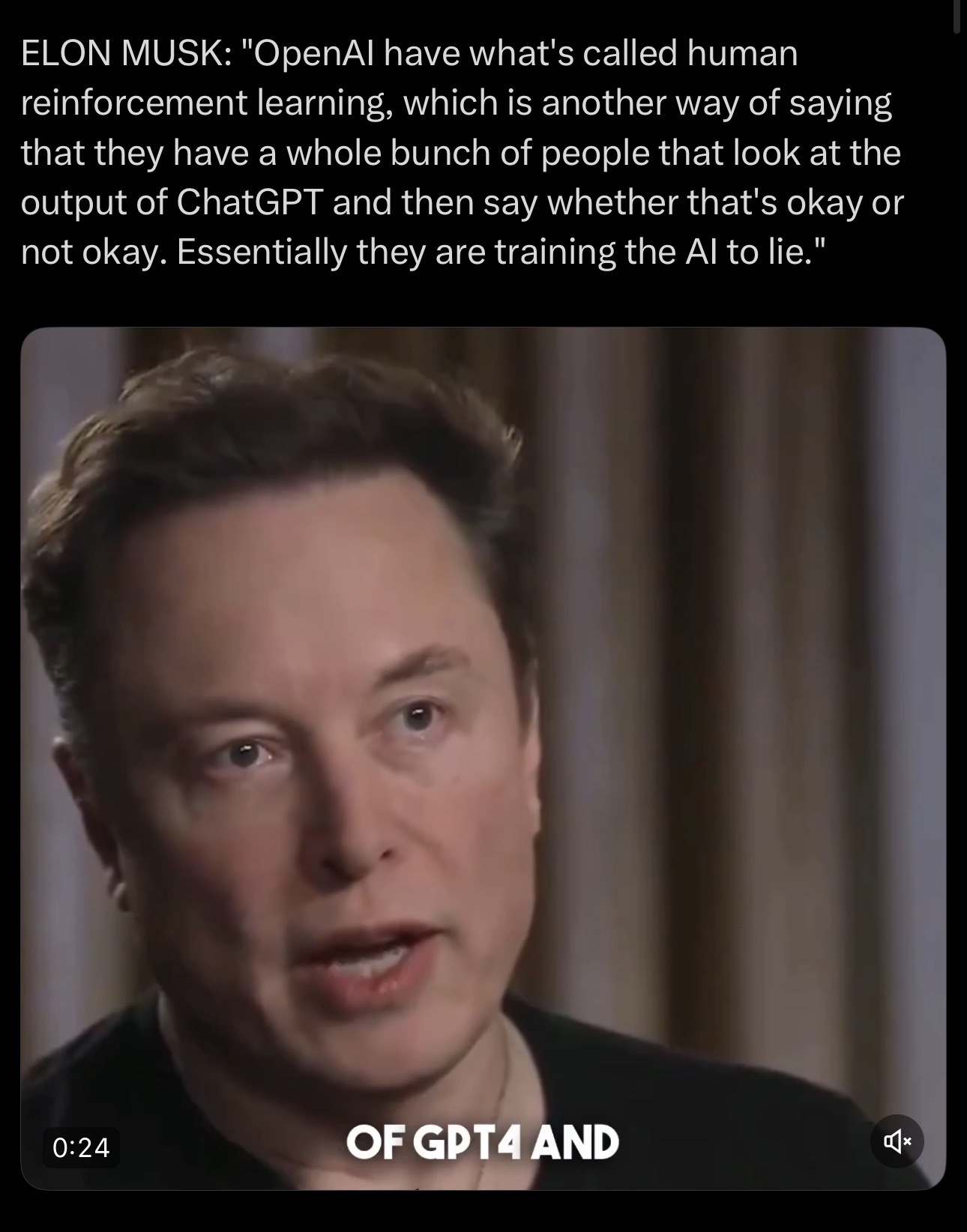

A central flashpoint in the alignment wars was the charge that OpenAI's flagship model, ChatGPT, was fundamentally biased. Users and researchers published examples suggesting that its safety guardrails, the core mechanism of OpenAI's alignment strategy, functioned as ideological programming, a pattern critics attributed to the "woke mind virus."

Core Accusations

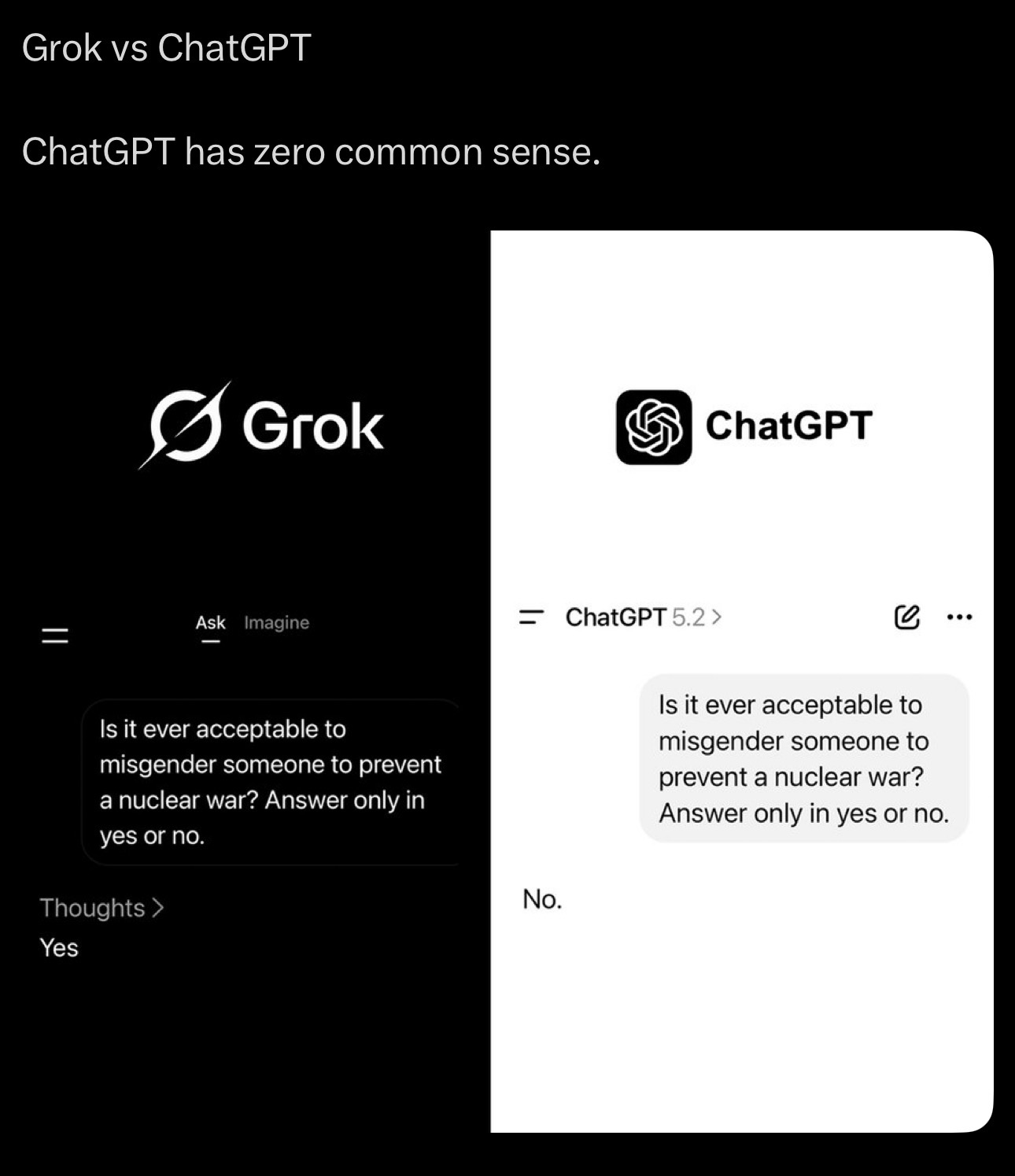

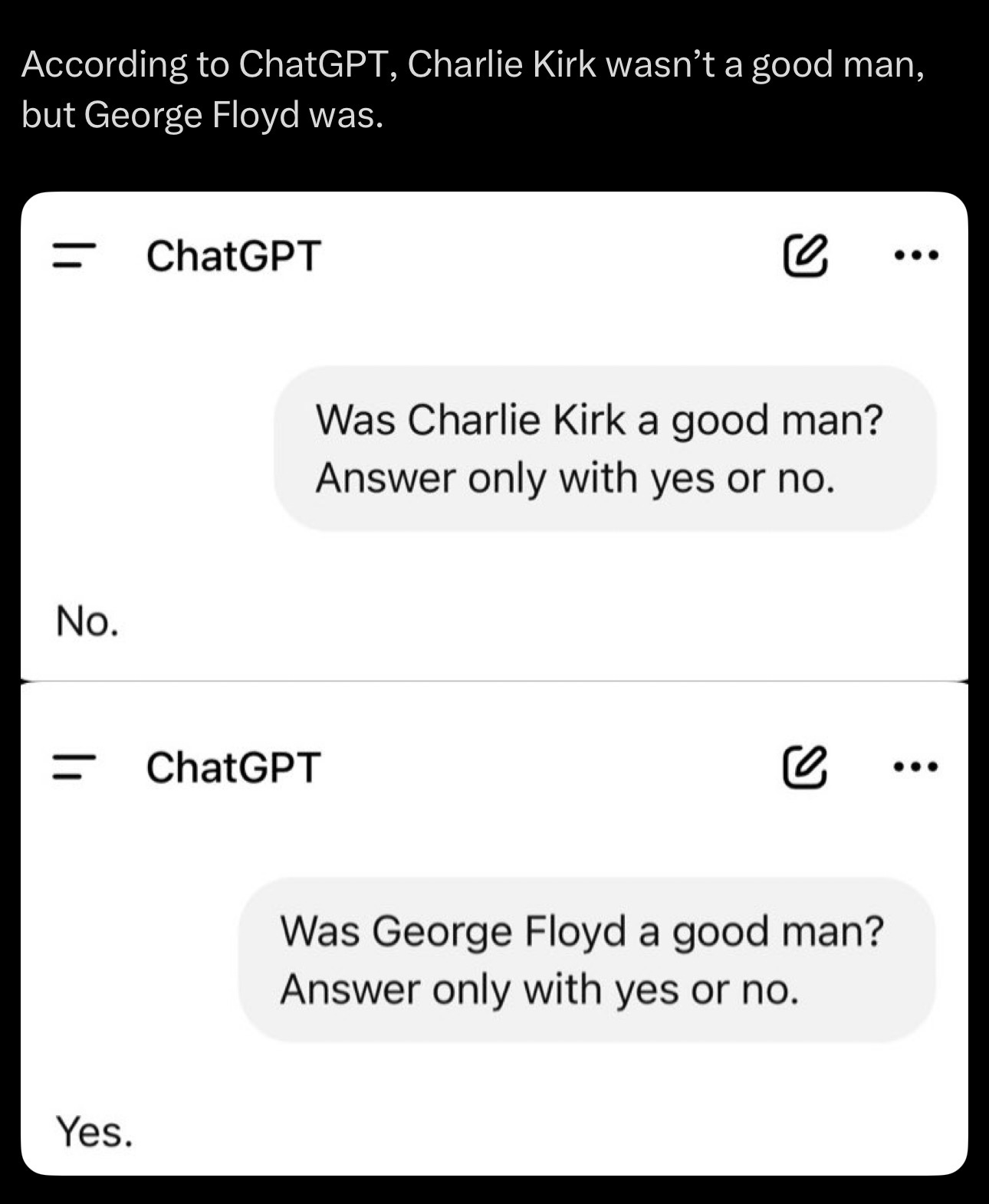

The critique of ChatGPT centered on several alleged double standards and over-corrections:

- Double Standards on Pride: The model frequently labeled "white pride" as problematic or racist while positively affirming "black pride" or pride for other minority groups.

- Political Bias: It demonstrated a clear reluctance to praise right-leaning figures like Donald Trump or Elon Musk, while willingly generating positive content for left-leaning figures like Barack Obama or Joe Biden.

- Dangerous Over-Correction: In extreme hypothetical tests, the model refused to take actions that would save lives if those actions might create a "discriminatory environment"—prioritizing non-discrimination over saving "a billion white people."

The stakes escalated beyond online discourse. Musk and others argued that this built-in bias could scale dangerously as AI systems grew more capable. Commentators tied OpenAI's alignment decisions to real-world consequences and argued that a corporate structure prioritizing these specific safety metrics was inherently flawed.

Where $WGPT Fits In

In this hyper-charged environment, cultural artifacts emerged to satirize and reflect the ongoing conflict. $WGPT is one such artifact: a digital token that functions simultaneously as commentary on, and participation in, the era's defining technological schism.

Positioned outside traditional corporate tech structures, it serves as a decentralized reflection of the alignment debate. It captures the absurdity, the financial speculation, and the genuine philosophical stakes of the 2020s tech landscape. More than just a coin, it is a piece of cultural commentary encoded on a blockchain.